A very disturbing trend is emerging among the American electorate: the willingness to engage in illegal voting practices “to prevent the other side from winning.”

According to a brand-new poll from The Heartland Institute and Rasmussen Reports, nearly three in 10 voters admit they would engage in at least one kind of illegal voting act in the 2024 election “to help stop” either President Joe Biden or former President Donald Trump from being re-elected.

Specifically, 28 percent of Americans admit they would commit at least one of the following illegal actions in the 2024 election: vote in multiple states; destroy mail-in ballots of friends or family members; pay or offer rewards to fellow voters; deliberately provide incorrect information about the place, time, or date associated with casting a ballot; and/or alter candidate selections on mail-in ballots.

Unfortunately, this is a bipartisan problem as both Democrats and Republicans are ready and willing to engage in voter fraud in the upcoming election simply to ensure that their preferred candidate emerges victorious.

So, why is this happening?

One reason I believe more voters than ever are prone to engage in illegal voting is that the federal government wields vast powers, far beyond what the Founding Fathers envisioned when they drafted the Constitution. There is so much at stake in modern presidential elections that both sides believe that if they lose, the other side will reap all of the benefits of controlling the executive branch for four long years.

Another reason I think nearly 30 percent of Americans admit they would engage in election mischief is that they have simply lost faith in the integrity of U.S. elections.

The 2020 election was a game changer in the United States, as we now know that several government agencies participated in election interference efforts, such as squashing the blockbuster Hunter Biden laptop story. Of course, the mainstream media and social media platforms also put their thumbs on the 2020 election scale by censoring information that would have almost assuredly changed voters’ minds in the weeks and days before the election took place.

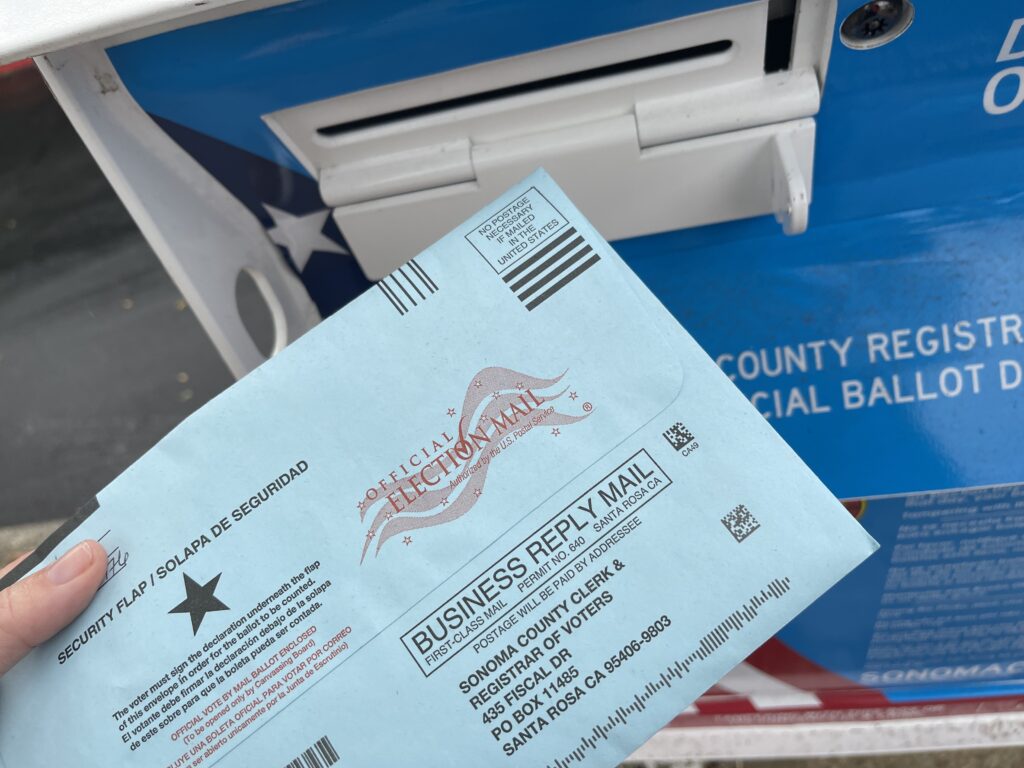

And let’s not overlook the wholesale changes to voting procedures that occurred in 2020 under the guise of the pandemic. Before the 2020 election, most Americans voted in person. However, that was not the case in 2020 as several states (including all of the vital swing states) sent tens of millions of absentee ballots based on inaccurate and outdated voter registration rolls.

Lastly, we can’t forget the reaction by Democrats after Donald Trump’s incredible upset of Hillary Clinton in the 2016 election. I strongly believe that many Democrats were so appalled by Trump’s unlikely victory in 2016 that they decided they would do anything, including committing election fraud, to prevent another Trump term. This is not a conspiracy theory; there is actually abundant evidence pointing to this being the case.

Unfortunately, this series of events has led far too many Americans to lose confidence in the electoral process, as several polls confirm.

By no means is this meant to excuse the behavior of those who have decided that the electoral process is hopelessly corrupt and that they therefore have a license to commit voter fraud. I am simply trying to understand why this dramatic shift in sentiment has occurred over such a short period of time.

Now, for the solutions. First and foremost, the mass mailing of absentee ballots based on unreliable voter registration rolls must cease. Second, “no excuse” absentee voting must be terminated. Third, for those who must vote by mail, a notary signature ought to be required. Fourth, ballot harvesting should be outlawed. And, fifth, in-person voting on Election Day should be the default mode of voting for the vast majority of Americans.

With fewer than 200 days before the monumental 2024 election, it is extremely unlikely that all of these solutions will be in place by the time voting begins. However, before these solutions are put in place, we need to have a robust societal discourse concerning the future of elections in this nation.

Once again, this is not a partisan issue. We do not want either side to lose faith in the electoral process. Once that Rubicon is crossed, there is no going back.

Photo by Karlis Dambrans. Attribution 2.0 Generic.