Global elites who cling to “green” policies cannot explain how the more than 341,000 wind turbines currently on the planet will make:

- The more than 6,000 products in our daily lives that did not exist before the 1800s and that now meet the supply chain demands of today’s hospitals, airports, telecommunications, appliances, electronics, sanitation infrastructure, and heating and ventilating systems that support current lifestyles.

- The transportation fuels that meet the heavy-weight and long-range needs of more than 50,000 jets moving people and products, more than 50,000 merchant ships carrying global trade, and the military and space programs that support life as we know it.

Wind turbines generate only electricity; they cannot make any products for life as we know it.

The world’s population grew from one billion to eight billion in less than 200 years, supported by the more than 6,000 products and transportation fuels made from fossil fuels.

Today, we’re a materialistic society. Wind and solar cannot make EVs, or any of the products or fuels that are derived from fossil fuels that support:

- Hospitals

- Airports

- Militaries

- Medical equipment

- Telecommunications

- Communications systems

- Space programs

- Appliances

- Electronics

- Sanitation systems

- Heating and ventilating

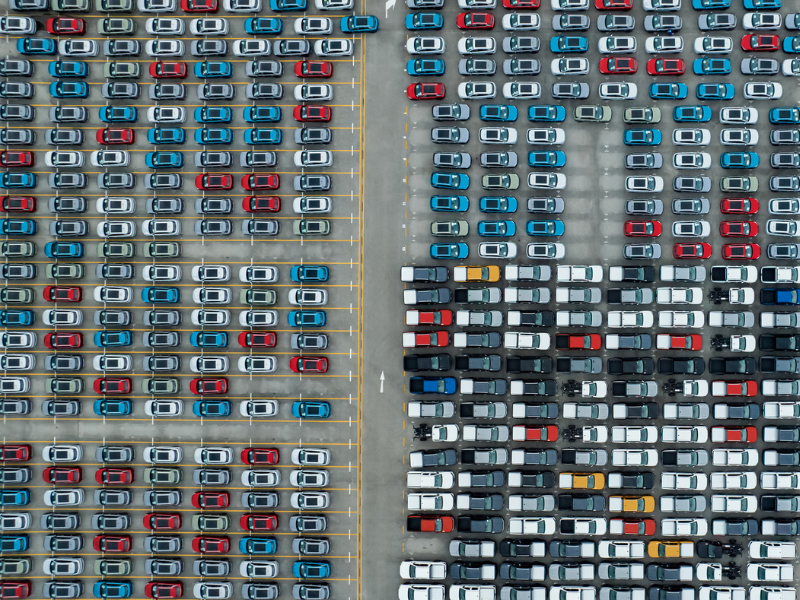

- Transportation – vehicles, rail, ocean, and air

- Construction – roads and buildings

- Agriculture – synthetic fertilizers made from fossil fuels, on which nearly half the world’s population relies

With the help of hospitals, doctors, and medications, life expectancy rose from 40 to more than 75 years during those 200 years.

Wind turbines and solar panels generate only electricity; they cannot make any of the products or transportation fuels derived from fossil fuels that support modern life.

EVs and internal combustion engine (ICE) vehicles are virtually identical in their materials: the tires, insulation, wires, electronics, glass, etc. are all made from fossil fuels.

The key minerals in an EV battery come primarily from mining operations in China and Africa that exploit workers. Thus, the Pulitzer Prize-nominated book Clean Energy Exploitations argues it’s unethical and immoral to financially encourage China and Africa to continue exploiting workers, and to inflict environmental degradation on those developing countries, just so wealthy countries can go “green.”

Cobalt mines in the Congo that exploit children were documented in this six-minute Sky News video.

The cost of installing wind turbines and solar panels depends heavily on the materials needed to build them. Those materials often come from workers living in poverty in China and Africa, and are extracted with child labor in mines and facilities with minimal or no workplace safety or environmental safeguards.

There is a “double standard” for reclamation between privately funded projects and government-funded projects. Refinery sites must be restored to pristine condition, while failed wind turbine installations are simply abandoned or dumped into waste sites.

Electricity came about after fossil fuels, as all the parts and components (wire, insulation, computers, glass) to generate electricity via coal, natural gas, hydro, nuclear, wind, or solar are made from fossil fuels.

Today’s transportation devices, such as vehicles, trucks, airplanes, and container ships, came after fossil fuels, as all the parts and components to build them are made from fossil fuels, and they need transportation fuels to move.

Ridding the world of fossil fuels would severely undermine electricity, transportation, and the infrastructure that did not exist 200 years ago.

Net Zero is not affordable for the more than six billion on this planet living in poverty.

- Since the American capture of Nicolás Maduro in early January 2026, Venezuela has been receiving a lot of press.

- Of particular interest is that as of today, more than 90 percent of the 30 million Venezuelans, i.e., 27 million, live in poverty. Nearly 70 percent are stuck in extreme poverty. Shockingly, 80 percent of the eight billion on planet Earth, or more than six billion, live on less than $10/day. The 27 million Venezuelans represent less than half a percent of those six billion living in poverty around the world.

In poor countries, millions of those living in poverty die every year:

- From indoor air pollution from having to burn wood, charcoal, grass and dung because they don’t have natural gas, propane, or electricity for cooking and heating.

- From bacteria and parasites in their water and food because they don’t have electricity, water treatment, or refrigeration.

- From malaria and other diseases because their substandard clinics and hospitals lack electricity, clean water, sufficient vaccines and antibiotics, and even window screens.

For affordable electricity, there are 460 coal plants under construction. Another 500 have been permitted or are about to be, with an additional 260 new plants expected to be announced. The vast majority of all this activity is in China and India.

Before we rid the planet of fossil fuels, we need to identify a viable replacement to support the supply chain of products and transportation fuels now demanded by all the infrastructures that did not exist 200 years ago. Wind turbines cannot make anything.

This reality does not deny the importance of environmental responsibility. Rather, it highlights the need for pragmatic frameworks that recognize material dependency, energy security, and global prosperity simultaneously. Sustainable progress must be grounded not in ideology, but in realistic transitions that preserve the foundations of modern civilization while improving environmental performance.