Nothing is more corrosive to a well-functioning republic than citizens losing faith in the electoral process. In fact, history is littered with examples of once-thriving civilizations that went downhill rapidly once the sacred voting system had been irrevocably corrupted.

Some say something like this could never happen in the United States. While I wish this were the case, it is not. The United States, like every nation that has existed before it, is indeed vulnerable to widespread election mischief.

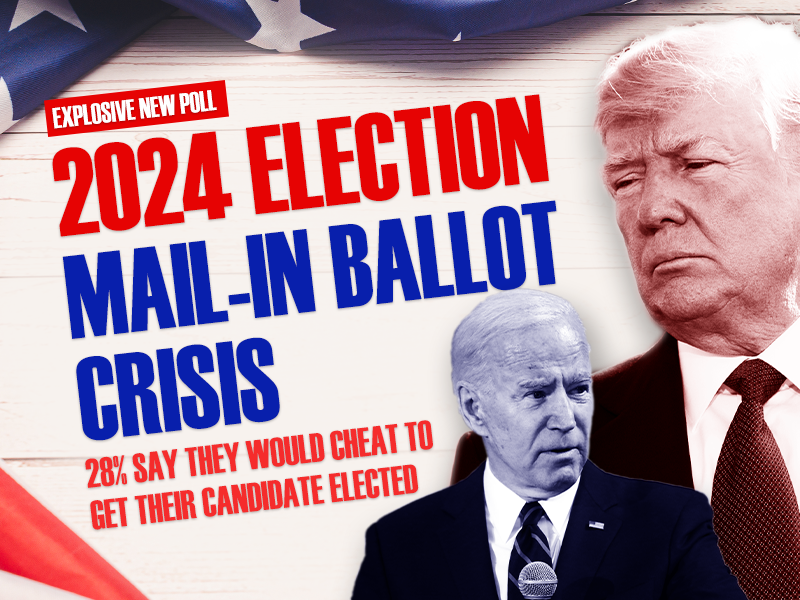

As a sign of the times, take this brand-new poll from The Heartland Institute and Rasmussen Reports, in which 28 percent of all voters say they would engage in at least one kind of illegal voting practice to help their preferred candidate (either President Joe Biden or former President Donald Trump) win the 2024 election.

According to the poll, nearly 30 percent of American voters say they would engage in illegal voting activities in the upcoming election, such as voting in multiple states; destroying mail-in ballots of friends or family members; paying or offering rewards to fellow voters; deliberately providing incorrect information about the place, time, or date associated with casting a ballot; and/or altering candidate selections on mail-in ballots.

If these voters are being honest about their willingness to engage in illegal voting, this means that approximately 44 million Americans would vote illegally in the 2024 election “to prevent the other side from winning.”

In other words, election integrity does not matter anymore. Winning elections, at any cost, is all that matters.

This should not come as a surprise to those who have been paying close attention to election procedures over the past two decades. While some say that the highly contested and controversial 2000 election was the genesis of this new ethos, I think the 2020 election was the true game changer.

Before the 2020 election, the vast majority of Americans had faith that elections were “run and administered well or somewhat well.” However, that changed in the months and days leading up to the 2020 election, when commonsense voting rules were upended under the guise of the pandemic.

The 2020 election was conducted unlike any other election in U.S. history, as states sent tens of millions of absentee ballots based on notoriously outdated and inaccurate voter registration rolls. As a result of this dramatic shift in voting procedures, illegal voting became much easier.

This is not speculation. According to a poll conducted in late 2023, 28.2 percent of Americans who voted by mail admitted to committing at least one kind of illegal voting act in the 2020 election. What’s more, it is likely that this flood of illegal mail-in votes in 2020 helped Joe Biden defeat Donald Trump, seeing as Biden voters were twice as likely to vote by mail as Trump supporters.

Although the mainstream media and several federal government agencies tried to downplay the role of illegal mail-in voting in the 2020 election, it sure seems that most Americans, especially those who lean to the right, didn’t believe what they were being told.

Perhaps this is why so many Americans on both sides of the political aisle say they are willing to commit illegal voting acts to ensure that their preferred candidate is victorious in the pivotal 2024 election.

I understand why Republican voters might think that they must do anything to win in 2024, given the perilous state of the country and their assumption that mass mail-in voting heavily favors Democrats.

However, that is highly flawed reasoning. Instead of stooping to the level of their opponents, GOP voters ought to take the higher road and abstain from engaging in any and all illegal voting activities.

Trust me, we don’t want to live in a nation in which the side that cheats the best ends up winning the election. That is a recipe for disaster.

The best possible solution is simple: ironclad election integrity. The best way to achieve it is for state lawmakers to impose sound election rules that prevent illegal voting from occurring in the first place. A few examples include prohibiting “no excuse” mail-in voting, requiring signature verification and/or a notarized signature on mail-in ballots, outlawing ballot harvesting, requiring able-bodied voters to vote in person, and imposing harsh penalties on those who vote illegally.

Deterring illegal voting as much as possible is the first step in saving America. After all, if elections aren’t free and fair, the will of the people means nothing.

Photo by Missvain. Creative Commons Attribution 4.0 International